Last Updated: 2019-07-20

Author: Jeremy Jacobson

The purpose of this codelab is to familiarize you with common concepts and terms one encounters while programming by placing them in an historical context. Along the way, we will describe at a high level how modern computers work. At the end, you will look at the computer in front of you in a new light!

What you'll learn

- Binary and Hexadecimal numbers

- Characters, ASCII, Unicode

- High vrs low level programming languages

- Compiled vrs interpreted languages

The road leading to today's computers goes back millenia. Historically, a computer was a person who makes calculations, especially with a calculating machine.

What is a computer?

For this, one should take a field trip to the Arithmeum.

Earliest examples of physical memory (The third floor of the Arithmeum)

What is the simplest way to physically represent numbers such that we may physically manipulate them in order to do arithmetic?

Counting fingers!

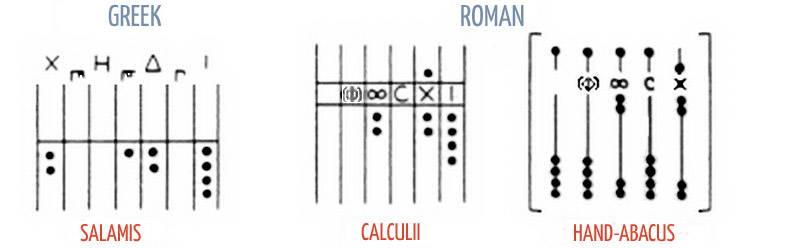

Others, dating back thousands of years, are:

- Marked stones called calculi, or marked bones

- Incan Rope counting, called Quipu

- The Abacus (See Feynmann versus the Abacus, as told by R. Feynmann)

- The same story, as told by the Abacus

The second floor of the Arithmeum (early mechanical calculators)

What do you mean mechanical?

Binary arithmetic using rockers

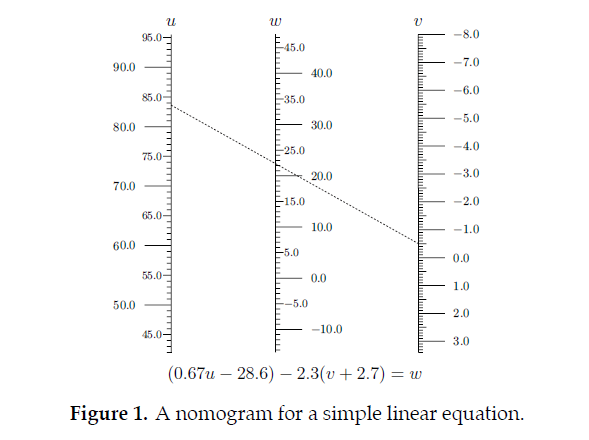

Slide rules and Nomograms

- A nomogram may be thought of as a generalized slide rule. The Greek word for law is nomos

- It was set up to solve just one equation that would've taken a lot of time on a slide rule, or required scales that no slide rule had

- For instance, to get the power output of a steam engine requires a nomogram with eleven different lines on it!

What do nomograms have to do with computing today?

Neural networks power deep learning and are changing a paradigm for computing that has been dominant for decades. The name is a misnomer as they are not networks but in fact parameterized families of mathematical functions. Specifically, they are piecewise linear functions. There are many interesting parallels between generalized slide rules and neural networks:

- They both represent multivariable functions on a plane

- They both offer an arbitrarily good approximation to a wide class of functions when defined on a bounded domain

- They both find ‘simple' solutions to complex problems: evaluating a neural network amounts to repeatedly taking sums, scalar multiples, and max(0,x); evaluating a nomogram requires that one draw a line.

- They both obtain this simplicity by solving problems in two phases: a difficult ‘model discovery' phase (e.g. training the neural net or finding the required nomogram), and a simpler evaluation phase.

So in these respects, computing has returned to a theme that, before electronic computers and their speed made it unnecessary, was very important for calculations. As Moore's law is now essentially over, one is led to wonder: perhaps these parallels reflect the fact that electronic computers now face the same difficulties humans have always encountered?

The first floor of the Arithmeum (four-species mechanical calculators and more)

From the Arithmeum's webpage:

The first four-species calculating machine, which means that it is able to perform all four basic operations of arithmetic, was built by Gottfried Wilhelm Leibniz. This machine can be viewed on the first floor,

- Leibniz completed his calculator in 1694

- Mechanical calculators continued to evolve

- If you took a statistics course at a university, even as late as the 1970's, you may have found yourself using the sort of mechanical calculator pictured below

Silicon Microchip Computers

Excerpts from the Arithmeum's website (link appeared earlier):

"The 1970s...were the years that saw mechanics being increasingly replaced by electronics...

...A 300-year period of development of ever more complex mechanical machines was coming to an abrupt end"

What changed?

- A transistor is a device that regulates current or voltage flow and acts as a switch or gate for electronic signals

- Just like the wooden rockers we saw earlier, but switching at the speed of light!

- Transistors are the basic elements in integrated circuits (IC), which consist of very large numbers of transistors interconnected with circuitry and baked into a single silicon microchip.

- Von Neumann architecture changed the computing paradigm.

What is the most limiting aspect of the design of all the calculators we have seen so far?

Von Neumann architecture

What follows are excerpts from a non-technical article that summarizes John Von Neumann's fascinating life and achievements. His wife, Klara Dan, was one of the world's first computer programmers.

Key points:

- The principal feature of a Von Neumann computer is that the program and any data are both stored together, usually in a slow-to-access storage medium such as a hard disk, and transferred as required to a faster, and more volatile storage medium (RAM) for execution or processing by a central processing unit (CPU).

- Since this how practically all present day computers work, the term "von Neumann architecture" is rarely used now, but it was in common parlance in the computing profession through to the early 1970s.

- When von Neumann proposed this architecture in 1945, it was a radical idea. Prior to then, programs were viewed as essentially part of the machine, and hence different from the data the machine operated on.

Digital computers understand the language of 0's and 1's called binary.

Watch the following three minute video at https://youtu.be/GcDshWmhF4A before reading more. In the video you will learn how a simple mechanical system of wooden rockers can be used to do binary arithmetic. Then note:

- Instead of rockers, in a modern digital computer we have transistors acting as switches, 1 for high voltage level and 0 for low voltage level

- Computers use binary because they can be represented by a transistor that is relatively easy to fabricate in silicon and millions of them can be put on a chip

- Each time we add another rocker, we double how many numbers can be represented, and this growth is exponential.

What is the biggest number represented in the marble adding machine?

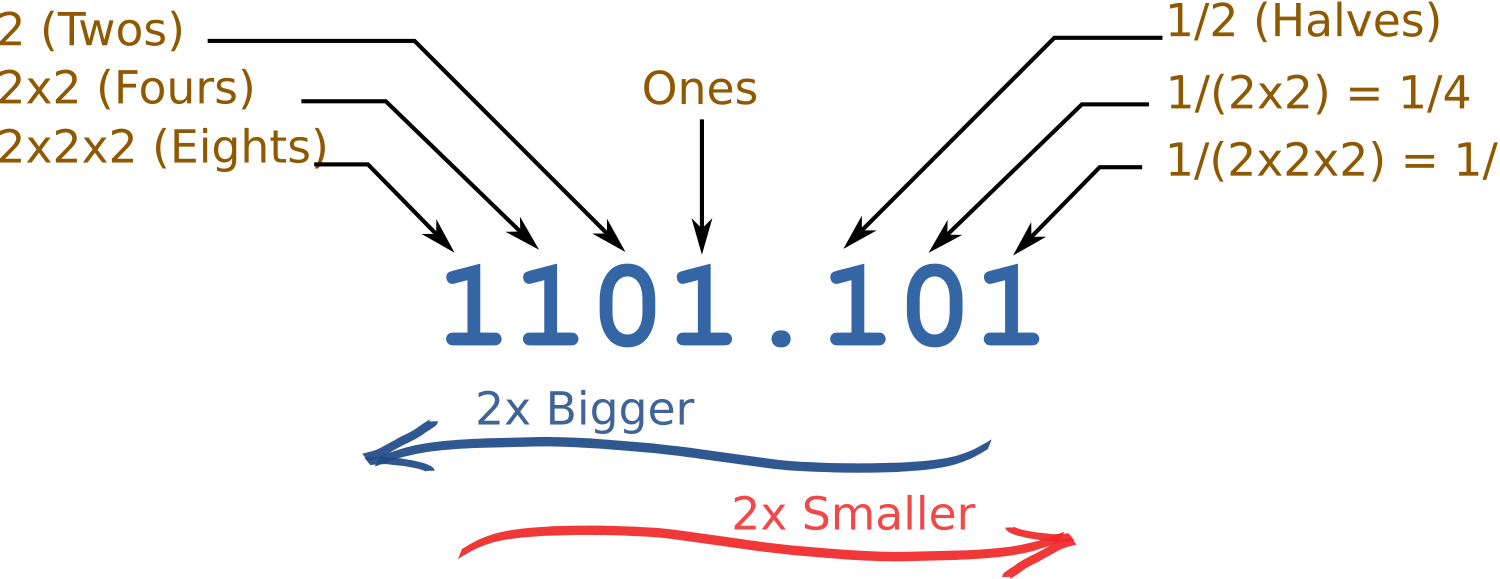

What is binary?

- A binary number is a number written using only digits 0 and 1

- A bit is a single binary digit. For instance, 101 has three bits and 10 two bits

- A group of eight bits is called an 8-bit byte, and sometimes is called simply a byte

- 0 represents zero, 1 represents one.

- 10 represents two

- 100 represents 4

- 1000 represents 8...

What binary number represents 3?

Machine code or binary code is the term used to describe any binary instruction which the computer's CPU will read and execute, e.g. 10001000 01010111 11000101 11110001 10100001 00010110. Each instruction performs a very specific task, such as adding two binary numbers together.

In the very early days of computing, programming instructions were provided in this language, which is difficult for human beings to read and write.

How many distinct numbers are represented by a byte?

Chess Board Legend (Problem 1)

There is an old Indian legend about a King who was challenged to a game of chess by a visiting Sage. The King asked "what is the prize if you win?"

The Sage said he would simply like some grains of rice: one on the first square, 2 on the second, 4 on the third and so on, doubling on each square. The King was surprised by this humble request.

The Sage won.

Answer the questions below using a bash terminal.

How many grains of rice should the sage receive? (Answer using Bash)

A billion grains of rice is about 25 tonnes (1,000 grains is about 25g). Converting from grains of rice into tonnes, to fill all 64 squares in a chess board the king would need about 460 billion tonnes of rice!

In the legend, the Sage tells the King that he doesn't have to pay the debt at once, but can pay him over time by serving rice to pilgrims every day until the debt is paid off.

What is the output of echo $((2**64))?

How does bash represent integers on an Ubuntu instance on AWS?

If you are having trouble answering the questions above, a more detailed explanation which shows how to make the necessary calculations in bash may be found in this article.

Summary

In the table below you will find the exact count of the number of grains of rice as the squares increase.

Table with grain of rice count on chessboard

Square | Grains | Total |

1 | 1 | 1 |

2 | 2 | 3 |

3 | 4 | 7 |

4 | 8 | 15 |

5 | 16 | 31 |

6 | 32 | 63 |

7 | 64 | 127 |

8 | 128 | 255 |

9 | 256 | 511 |

10 | 512 | 1023 |

11 | 1024 | 2047 |

12 | 2048 | 4095 |

13 | 4096 | 8191 |

14 | 8192 | 16383 |

15 | 16384 | 32767 |

16 | 32768 | 65535 |

17 | 65536 | 131071 |

18 | 131072 | 262143 |

19 | 262144 | 524287 |

20 | 524288 | 1048575 |

21 | 1048576 | 2097151 |

22 | 2097152 | 4194303 |

23 | 4194304 | 8388607 |

24 | 8388608 | 16777215 |

25 | 16777216 | 33554431 |

26 | 33554432 | 67108863 |

27 | 67108864 | 134217727 |

28 | 134217728 | 268435455 |

29 | 268435456 | 536870911 |

30 | 536870912 | 1073741823 |

31 | 1073741824 | 2147483647 |

32 | 2147483648 | 4294967295 |

33 | 4294967296 | 8589934591 |

34 | 8589934592 | 17179869183 |

35 | 17179869184 | 34359738367 |

36 | 34359738368 | 68719476735 |

37 | 68719476736 | 137438953471 |

38 | 137438953472 | 274877906943 |

39 | 274877906944 | 549755813887 |

40 | 549755813888 | 1099511627775 |

41 | 1099511627776 | 2199023255551 |

42 | 2199023255552 | 4398046511103 |

43 | 4398046511104 | 8796093022207 |

44 | 8796093022208 | 17592186044415 |

45 | 17592186044416 | 35184372088831 |

46 | 35184372088832 | 70368744177663 |

47 | 70368744177664 | 140737488355327 |

48 | 140737488355328 | 281474976710655 |

49 | 281474976710656 | 562949953421311 |

50 | 562949953421312 | 1125899906842623 |

51 | 1125899906842624 | 2251799813685247 |

52 | 2251799813685248 | 4503599627370495 |

53 | 4503599627370496 | 9007199254740991 |

54 | 9007199254740992 | 18014398509481983 |

55 | 18014398509481984 | 36028797018963967 |

56 | 36028797018963968 | 72057594037927935 |

57 | 72057594037927936 | 144115188075855871 |

58 | 144115188075855872 | 288230376151711743 |

59 | 288230376151711744 | 576460752303423487 |

60 | 576460752303423488 | 1152921504606846975 |

61 | 1152921504606846976 | 2305843009213693951 |

62 | 2305843009213693952 | 4611686018427387903 |

63 | 4611686018427387904 | 9223372036854775807 |

64 | 9223372036854775808 | 18446744073709551615 |

So what, what is so great about 64 switches being able to represent all the numbers from 0 up to 18446744073709551615?

We can use them for more than representing numbers...they can represent characters like A or !

A character is the smallest possible component of a text, for instance A or B or / and a character is represented on a screen or on paper by a set of graphical elements that's called a glyph.

The glyph for an uppercase A, for example, is two diagonal strokes and a horizontal stroke, though the exact details will depend on the font being used. Most Python code doesn't need to worry about glyphs; figuring out the correct glyph to display is generally the job of a GUI toolkit or a terminal's font renderer.

ASCII

From https://docs.python.org/3.0/howto/unicode.html

In 1968, the American Standard Code for Information Interchange, better known by its acronym ASCII, was standardized.

- ASCII defined numeric codes for various characters, with the numeric values running from 0 to 127.

- For example, the lowercase letter ‘a' is assigned 97 as its code value.

- The ASCII value of the uppercase letter 'A' is 65.

- The ASCII value 0 is the symbol 'NUL' also known as the null byte.

- Codes 0 through 31 and 127 (decimal) are unprintable control characters. Code 32 (decimal) is a nonprinting spacing character.

- Codes 33 through 126 (decimal) are printable graphic characters.

ASCII was an American-developed standard, so it only defined unaccented characters. There was an ‘e', but no ‘é' or ‘Í'. This meant that languages which required accented characters couldn't be faithfully represented in ASCII. (Actually the missing accents matter for English, too, which contains words such as ‘naïve' and ‘café', and some publications have house styles which require spellings such as ‘coöperate'.)

Try it yourself

Write the characters of your name in ASCII.

In ASCII, everything could be stored in 7 bits. As we want to represent characters from any possible language, we will need more bits. To represent as many glyphs as possible in an efficient way, hex is used in unicode..

What is Hexadecimal?

Hex, or hexadecimal, is a number system of base 16. Hex digits are 0,1,2,3,4,5,6,7,8, 9, A, B, C, D, E, F. This number system is more commonly encountered in programming than binary.

Each hexadecimal digit maps directly into 4 bits:

0000=0,

0001=1,

0010=2,

0011=3,

0100=4,

0101=5,

0110=6,

0111=7,

1000=8,

1001=9,

1010=A,

1011=B,

1100=C,

1101=D,

1110=E,

1111=F.

Notice, given the mapping above, one hex digit is equivalent to 4 bits.

Now, lets say you have the binary sequence 1001111000001010 - it can easily be converted to hex by grouping in blocks - each block consisting of four bits.

1001 1110 0000 1010 => 9 14 0 10 which in hex becomes: 9E0A.

In particular, Hex is a neat shorthand notation for binary. Confusingly, often files consisting of hex are called binary by developers, although strictly speaking the representation is hexadecimal not binary. This is harmless since the conversion from one to the other is unambiguous.

Example

In html programming, colors are often represented by a 6-digit hexadecimal number: FFFFFF represents white, 000000 represents black, and so on.

Exercise

What is Emory's official school color for blue in 6-digit hex representation?

Conversion Table

Hex | Binary | Decimal |

0 | 0 | 0 |

1 | 1 | 1 |

2 | 10 | 2 |

3 | 11 | 3 |

4 | 100 | 4 |

5 | 101 | 5 |

6 | 110 | 6 |

7 | 111 | 7 |

8 | 1000 | 8 |

9 | 1001 | 9 |

A | 1010 | 10 |

B | 1011 | 11 |

C | 1100 | 12 |

D | 1101 | 13 |

E | 1110 | 14 |

F | 1111 | 15 |

10 | 10000 | 16 |

11 | 10001 | 17 |

12 | 10010 | 18 |

13 | 10011 | 19 |

14 | 10100 | 20 |

15 | 10101 | 21 |

16 | 10110 | 22 |

17 | 10111 | 23 |

18 | 11000 | 24 |

19 | 11001 | 25 |

1A | 11010 | 26 |

1B | 11011 | 27 |

1C | 11100 | 28 |

1D | 11101 | 29 |

1E | 11110 | 30 |

1F | 11111 | 31 |

20 | 100000 | 32 |

21 | 100001 | 33 |

22 | 100010 | 34 |

23 | 100011 | 35 |

24 | 100100 | 36 |

25 | 100101 | 37 |

26 | 100110 | 38 |

27 | 100111 | 39 |

28 | 101000 | 40 |

29 | 101001 | 41 |

2A | 101010 | 42 |

2B | 101011 | 43 |

2C | 101100 | 44 |

2D | 101101 | 45 |

2E | 101110 | 46 |

2F | 101111 | 47 |

30 | 110000 | 48 |

31 | 110001 | 49 |

32 | 110010 | 50 |

33 | 110011 | 51 |

34 | 110100 | 52 |

35 | 110101 | 53 |

36 | 110110 | 54 |

37 | 110111 | 55 |

38 | 111000 | 56 |

39 | 111001 | 57 |

3A | 111010 | 58 |

3B | 111011 | 59 |

3C | 111100 | 60 |

3D | 111101 | 61 |

3E | 111110 | 62 |

3F | 111111 | 63 |

40 | 1000000 | 64 |

41 | 1000001 | 65 |

42 | 1000010 | 66 |

43 | 1000011 | 67 |

44 | 1000100 | 68 |

45 | 1000101 | 69 |

46 | 1000110 | 70 |

47 | 1000111 | 71 |

48 | 1001000 | 72 |

49 | 1001001 | 73 |

4A | 1001010 | 74 |

4B | 1001011 | 75 |

4C | 1001100 | 76 |

4D | 1001101 | 77 |

4E | 1001110 | 78 |

4F | 1001111 | 79 |

50 | 1010000 | 80 |

51 | 1010001 | 81 |

52 | 1010010 | 82 |

53 | 1010011 | 83 |

54 | 1010100 | 84 |

55 | 1010101 | 85 |

56 | 1010110 | 86 |

57 | 1010111 | 87 |

58 | 1011000 | 88 |

59 | 1011001 | 89 |

5A | 1011010 | 90 |

5B | 1011011 | 91 |

5C | 1011100 | 92 |

5D | 1011101 | 93 |

5E | 1011110 | 94 |

5F | 1011111 | 95 |

60 | 1100000 | 96 |

61 | 1100001 | 97 |

62 | 1100010 | 98 |

63 | 1100011 | 99 |

64 | 1100100 | 100 |

If you've ever written some Python code and received a message such as UnicodeDecodeError: 'ascii' codec can't decode byte 0xff in position 6: ordinal not in range(128) then you have run into a problem with character sets, encodings, Unicode and the like.

- The Unicode standard describes how characters are represented by code points.

- A code point is an integer value, usually denoted in hex

- A Unicode string is a sequence of code points.

- The rules for translating a Unicode code point into a sequence of bytes are called an encoding.

- The rules for converting a Unicode character into ASCII are simple; for each code point:

- If the code point is < 128, each byte is the same as the value of the code point.

- If the code point is 128 or greater, the Unicode string can't be represented in ASCII encoding. (Python raises a UnicodeEncodeError exception in this case.)

The full list of code points can be found at http://www.unicode.org/charts/

What is UTF-8?

Unicode Transformation Format 8-bit (UTF-8) is a variable-width encoding that can represent every character in the Unicode character set.

Python supports writing source code in UTF-8 by default:

- \xnn - specifies a two digit hex unicode 'code point'.

- \unnnn - specifies a four digit hex unicode 'code point'.

- \Unnnnnnnn - specifies an eight-byte hex unicode 'code point'.

- The full number of digits must be used, padded with leading 0's.

Try it yourself

Write your name in Unicode (UTF-8). What is your favorite code point?

Now that we understand binary, we can finish our high level overview of how we program by describing how instructions are passed to a computer.

The genesis of programming

Early digital computers

The earliest digital computers where Konrad Zuse's Computers. In the year 1943 he gave the following definition: Computing is the deviation of result specifications to any specifications by a prescription. What does this mean? In a word, they were programmable!

In particular, they were designed to read arbitrary binary instructions from a punch tape (they should work in the binary digit system because Zuse wanted to contruct his computer with binary switching elements), and not only the numbers should be represented in a binary form, but the whole logic of the machine should work in a binary switching mechanism (0-1-principle)!

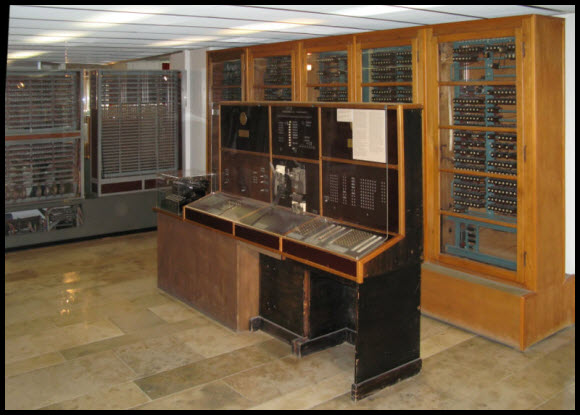

From this definition, Konrad Zuse defined the architecture of his computers Z1, Z2, Z3 and Z4. The Z4 is pictured below. It had 512 bytes of memory.

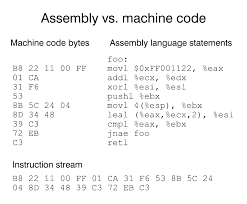

What is assembly language?

An assembly language is a programming language that enables a programmer to use human-readable text to write machine code.

From a stackoverflow comment:

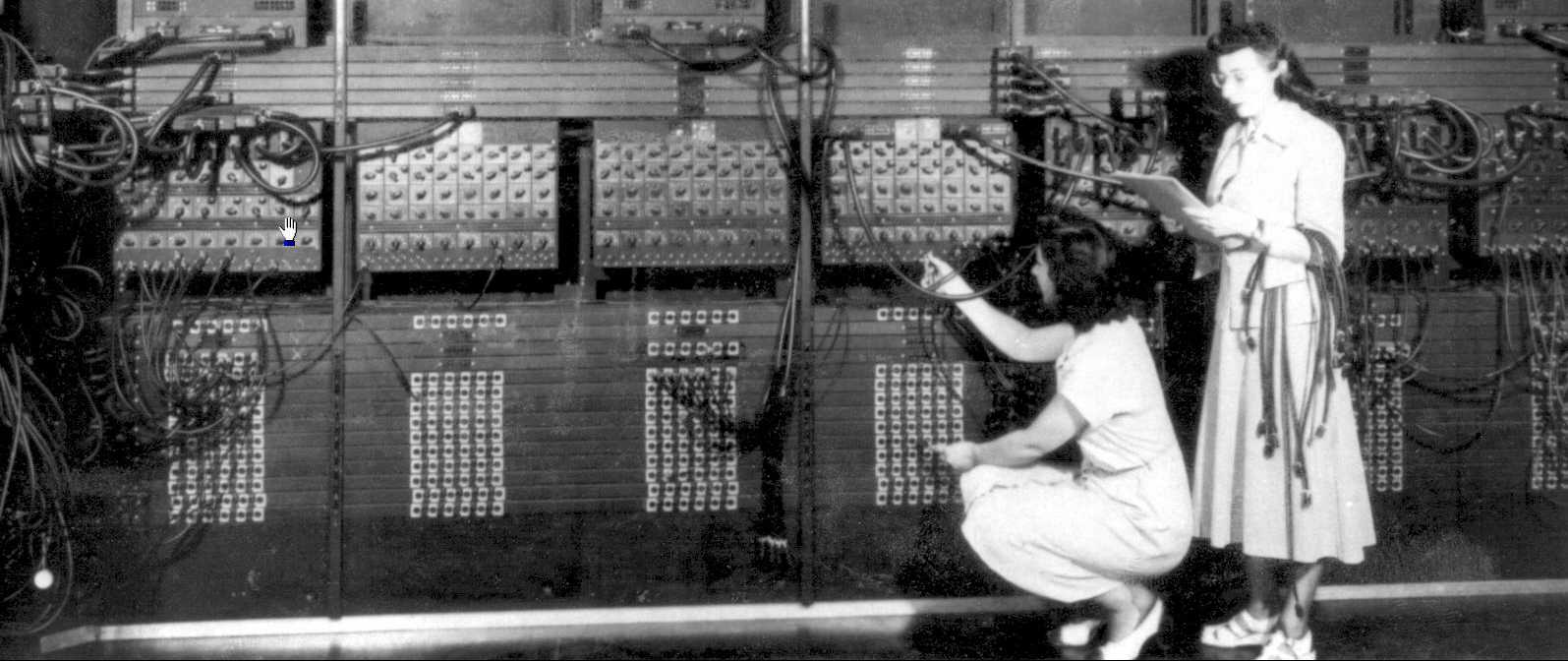

My grand father was working with a Z1. The modus operandi was to write down your code in assembly language on paper and the secretary would actually do the transcription (to binary) to punch cards, then pass them to the machine operator and the result would be handed back the morning after.

In particular, the first assemblers were human!

Here are some assemblers at work.

When programming modern computers, these details, which are specific to the hardware are hidden from the programmer in low level languages such as Intel X86.

High level languages are designed to allow humans to efficiently write powerful programs. Ideally, they do not require the programmer to think about hardware details, that is, the same code should work on many different types of hardware (Intel vrs AMD, 64-bit vrs 32-bit, etc.) and operating systems (Linux, Windows, Mac).

High and low level languages

Compiled vrs interpreted languages

Besides high vrs low level languages, there is a further distinction.

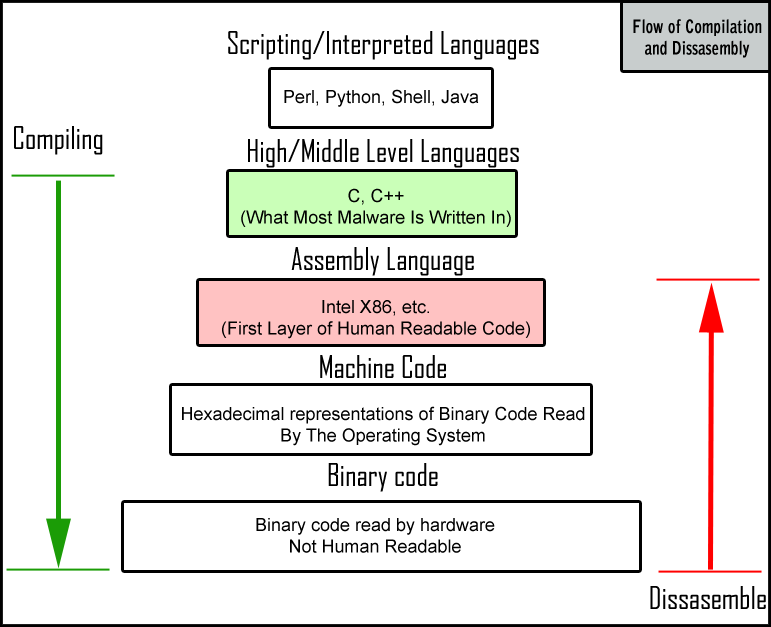

Roughly speaking, compilation is the process of converting human readable code such as C++ into binary instructions. Compiled languages require this step when programming: first code, then compile, then run the compiled code as many times as you want.

Interpreted languages run inside of a program that interprets the commands and executes them immediately. Since the conversion to binary step is happening everytime you run the code, these languages are generally slower, although there are tricks (e.g. using these Python to wrap C++ or Fortran code) that make this a non-issue in some applications.

Other examples of popular compiled languages

- Fortran

- Go

Congratulations, you've competed essential computer literacy!

You now know the key concepts that make modern computers work and allow us to program them.